This season marks 125 years since pitchers first delivered their offerings from the current distance of 60½ feet from home plate. That’s 10½ feet, or more than 20%, farther than before the 1893 season, a massive change that launched the 1890s “ultra-live ball” era but also produced a more lasting change: pitchers used fairly frequently in relief roles. This new breed of pitcher was not a relief specialist by any means, but it marked a recognizable start to the regular employment of some starting pitchers in relief. Even after the lively ball of the 1890s and early 1900s gave way to the dead ball era that followed, use of relievers continued to expand as complete games began their inexorable descent to today’s minuscule levels. But before we get into that, here are some of the immediate effects of the increased pitching distance.

- Scoring increased from 1892 to 1893 by 1.47 runs to 6.57 per team game, then increased a further 0.81 runs in 1894. The five seasons from 1893 to 1897 remain the five highest scoring seasons in the 125 years since 1893.

- Batting average in 1893 increased by 35 points from the year before, from .245 to .280, and then jumped a further 29 points in 1894, when the league slugged .435, the second-highest rate of the past 125 seasons (though only the 51st-highest ISO season, with 2017 topping that list).

- Home runs jumped 26% from 1892 to 1893, and a further 34% from 1893 to 1894.

- League WHIP increased by .246 from 1892 to 1893, and jumped again by .122 in 1894. The four seasons from 1893 to 1896 remain the four highest WHIP seasons of the past 125 years.

- Strikeouts fell by more than one-third from 1892 to 1893, dropping from 3.24 per team game to just 2.13. Walks jumped 16.7% to almost 4 per team game.

- Perhaps surprisingly, stolen bases held steady from 1892 to 1893, but that level (1.75 per team game) was already down sharply from 1887-90 when better than two stolen bases were recorded per team game. Nonetheless, the 1893 to 1897 seasons remain the five highest in stolen bases of the past 125 years.

I think you get the idea. An already high scoring environment (by modern era standards) became hotter still, reaching levels not seen since. Pitchers struggled to adjust to the new pitching distance and batters took advantage.

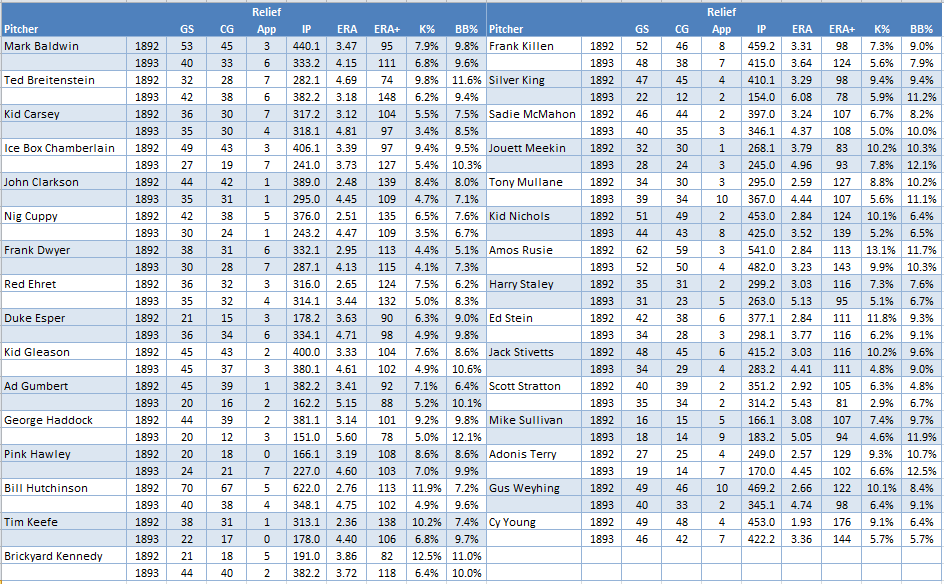

So, who were the unfortunate moundsmen thrust into the role of lab rat as baseball undertook this radical experiment? These are the 31 pitchers who recorded a qualified season in both 1892 and 1893.

- Of these 31 pitchers, only two (Breitenstein and Kennedy) posted a better ERA in 1893, despite 13 of the 31 recording a better ERA+.

- All 31 pitchers recorded a lower strikeout rate in 1893 than in 1892, and 20 of 31 recorded a higher walk rate.

- 23 of the 31 pitchers saw their percentage of starts completed fall from 1892 to 1893, while 20 of 31 made more relief appearances in the latter season.

- 24 of 31 pitchers saw their innings totals drop from 1892 to 1893, and 21 of 31 recorded a drop in IP per game.

Most of these pitchers ultimately adapted to the new pitching distance, with over half (16) recording 1000+ IP from 1893 on and only two of those (Carsey, Weyhing) posting an ERA+ below 100. Those who struggled to adapt included:

- Kid Gleason actually had a slightly better ERA+ from 1893 than before, but nonetheless switched to second base in 1895.

- HOFer Tim Keefe was released before the end of the 1893 season due to ineffectiveness, but was likely close to the end of his career anyway. Ditto for Tony Mullane, who stuck around for one more forgettable season (155 IP, 6.59 ERA) in 1894, though he continued pitching in the minors until 1902 at age 43.

- George Haddock had recorded 34-11 and 29-13 seasons in 1891 and 1892, but could manage only a 77 ERA+ in 1893-94 at age 26-27 and never pitched professionally again (maybe hitters were no longer intimidated by the cross-eyed stare on display in his B-R page picture).

- Silver King won 30+ games for four straight seasons (1887-90) with 147 ERA+ pitching in the AA and Players League, but was less effective in the NL the next two seasons, a trend which worsened from 1893 with only a 90 ERA+ in 453 IP to the end of his career.

- Scott Stratton saw his pre-1893 ERA+ of 112 slide to 79 in 516 IP to close out his career. Gus Weyhing had a similar ERA+ drop, from 118 to 86, in 1000+ IP on both sides of the 1893 demarcation.

- Don’t know if it had anything to do with the new pitching distance, but Pink Hawley hit 20+ batters for a record eight straight years starting in 1893, with the aforementioned Gus Weyhing in second with six seasons (but four of those were before 1893); Weyhing and Hawley rank 1st and 3rd in career HBP, bracketing Chick Fraser, a rookie teammate of Weyhing’s on the 1896 Colonels.

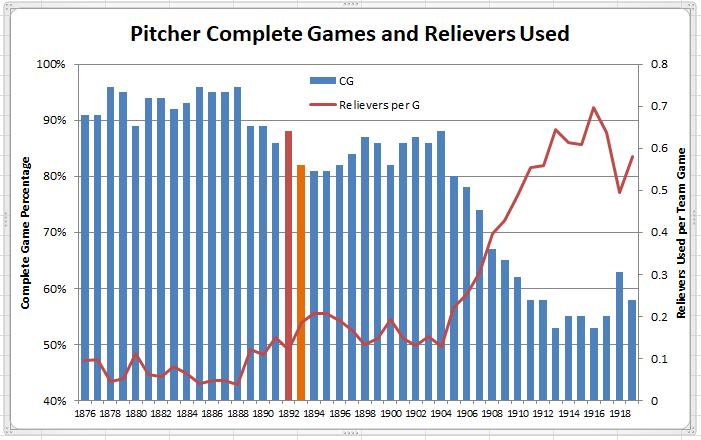

The above results are reflected in a decline in complete game percentage from 1892 to 1893, from 88% to 82%, as shown below. At the same time, reliever usage, which was already on the rise, increased over 35%, from a pre-1893 high of 0.150 per team game in 1891 to 0.207 in 1894 and 1895.

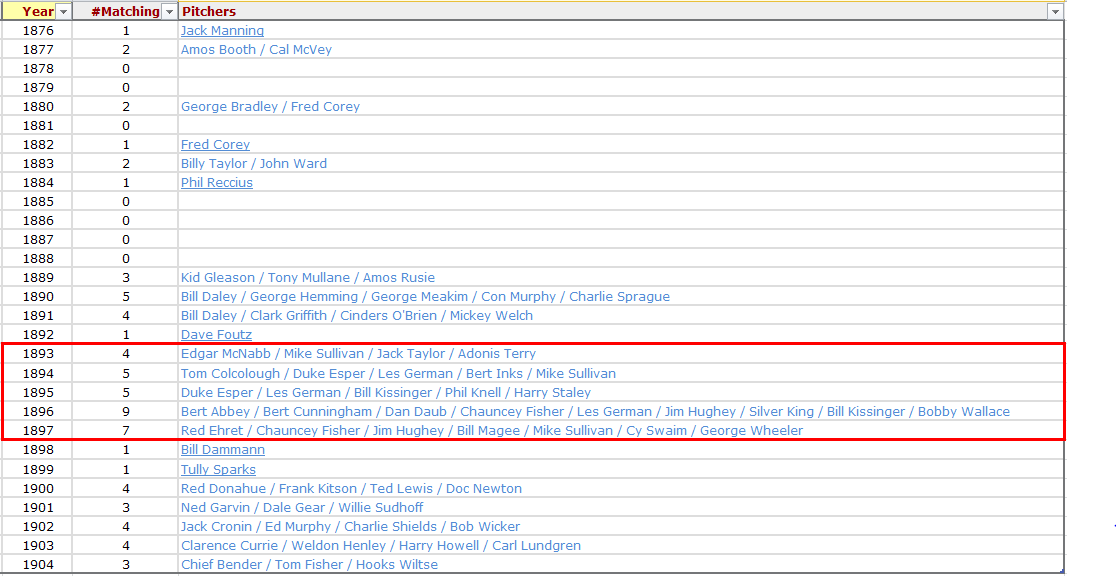

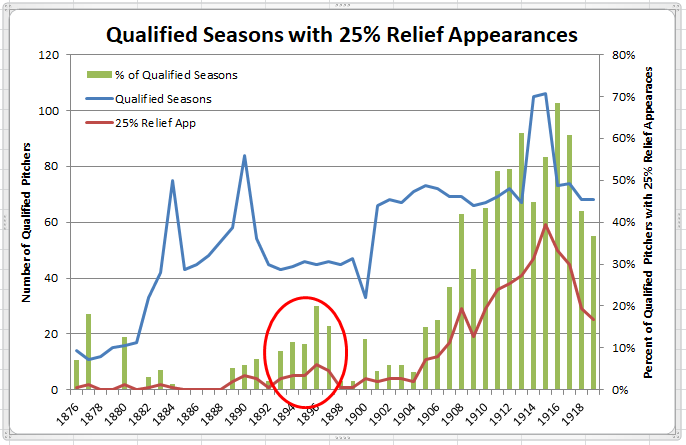

What piqued my interest, though, was how those extra relief appearances were doled out. The list below shows the pitchers each year with a qualified season that included at least 25% of appearances in relief.

As is evident from the above list, there were virtually no such pitchers before the 1889 season, when runs per game jumped by more than a run from 1888 levels. However, by the time the Players League and American Association had folded, there was again just one such pitcher in the 1892 NL, similar to the experience before 1889. But look what happened over the next five years (highlighted) in a 12-team league, with record numbers of such pitchers each year, albeit still at levels below one such pitcher per team.

When runs per game started to moderate in 1898, complete game percentage edged up again and the numbers of these 25% relief pitchers declined. But only briefly; when the season extended to 154 games in 1905, there were 11 such pitchers, climbing to 29 in 1908, 41 in 1913, and 50 in 1916, the last representing more than three such pitchers per team.

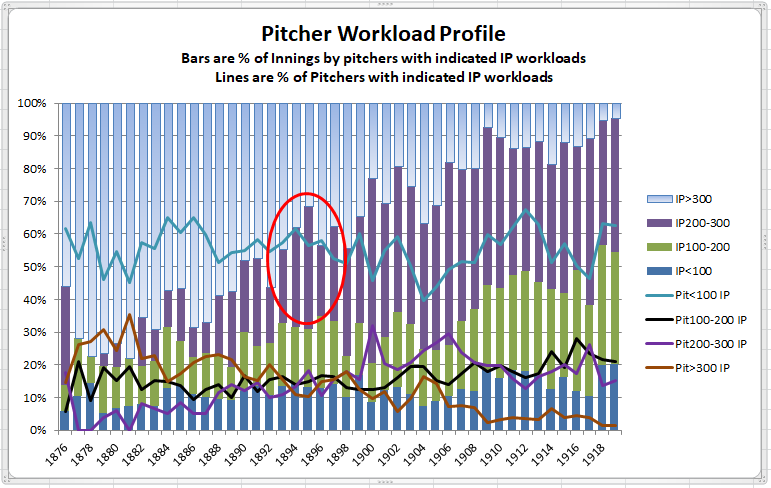

Here are those results graphically, with the 1893-97 period circled. The green bars in this period represent 10%-20% of all qualified pitching seasons having 25% or more of appearances in relief, the first sustained period of pitchers having that level of relief responsibility.
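To make that classification concrete, here is a minimal Python sketch of how one might compute the share of qualified pitching seasons with 25% or more of appearances in relief. It assumes a season is "qualified" at 1 IP per team game (the post doesn’t state its cutoff), treats every appearance that is not a start as a relief appearance, and uses made-up seasons; `PitcherSeason` and the helper functions are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class PitcherSeason:
    name: str
    year: int
    games: int        # total appearances
    starts: int       # games started
    ip: float         # innings pitched, as decimal innings
    team_games: int   # games played by the pitcher's team

def is_qualified(s: PitcherSeason, ip_per_team_game: float = 1.0) -> bool:
    # Assumed cutoff: at least 1 IP per team game.
    return s.ip >= ip_per_team_game * s.team_games

def relief_share(s: PitcherSeason) -> float:
    # Every appearance that was not a start counts as a relief appearance.
    return (s.games - s.starts) / s.games if s.games else 0.0

def share_heavy_relief(seasons, year: int, threshold: float = 0.25) -> float:
    """Fraction of qualified seasons in `year` with >= `threshold` relief appearances."""
    qualified = [s for s in seasons if s.year == year and is_qualified(s)]
    if not qualified:
        return 0.0
    heavy = [s for s in qualified if relief_share(s) >= threshold]
    return len(heavy) / len(qualified)

# Made-up seasons for illustration only (not real pitchers).
example_seasons = [
    PitcherSeason("Pitcher A", 1894, games=40, starts=28, ip=300.0, team_games=132),  # 30% relief
    PitcherSeason("Pitcher B", 1894, games=45, starts=42, ip=360.0, team_games=132),  # ~7% relief
    PitcherSeason("Pitcher C", 1894, games=20, starts=10, ip=90.0, team_games=132),   # not qualified
    PitcherSeason("Pitcher D", 1894, games=30, starts=25, ip=140.0, team_games=132),  # ~17% relief
]

share_1894 = share_heavy_relief(example_seasons, 1894)
print(f"{share_1894:.1%}")  # 33.3%
```

Raising `threshold` to 0.40 and swapping the qualification test for a 100 IP floor would give the stricter cut described in the next paragraph.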

You might be wondering whether there were any relief pitchers who were not just starters picking up their teammates. The answer is not really. Bumping up the relief role to 40% of appearances and dropping the workload threshold to 100 IP yields 1908 as the first season with a sizable number, with 16 that year (an average of one per team), compared to the previous MLB high of only four.

The exclusive reliever on a roster really didn’t exist at all; of the 851 seasons from 1876 to 1919 with zero starts, over 97% were of fewer than 20 IP, with a high prior to 1893 of only 23.1 IP, a mark that would stand until 1908. Bumping that up to a 5-start maximum and 50+ IP yields only 126 seasons over that period, just 36 of which came before 1908.

The other aspect of pitcher usage that changed significantly in the 1893-97 period was in the innings workload by the workhorses, those pitchers with seasons of 300+ IP. As shown below, prior to 1893, the smallest percentage of total innings pitched by these workhorses was just below 50%, in 1890 and 1891.

Those workhorse pitchers were under that previous minimum each season from 1893 to 1897 (circled) and not by a little, with all of those seasons below 45% and three below 40%. That trend would escalate rapidly, with workhorse workload dropping below 25% in 1900, and below 10% in 1909.
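The workhorse measure is just the share of all league innings thrown by pitchers with 300+ IP. A small Python sketch, assuming innings come in box-score notation where ".1" and ".2" mean one and two outs, so they are converted to outs before summing; the function names and sample values are hypothetical.

```python
def ip_to_outs(ip: str) -> int:
    """Convert box-score IP notation to outs, e.g. "300.2" (300 2/3 IP) -> 902."""
    whole, _, frac = ip.partition(".")
    thirds = int(frac) if frac else 0
    if thirds not in (0, 1, 2):
        raise ValueError(f"invalid IP value: {ip!r}")
    return int(whole) * 3 + thirds

def workhorse_share(ip_values, threshold_ip: int = 300) -> float:
    """Fraction of all outs recorded by pitchers at or above `threshold_ip` innings."""
    outs = [ip_to_outs(ip) for ip in ip_values]
    total = sum(outs)
    heavy = sum(o for o in outs if o >= threshold_ip * 3)
    return heavy / total if total else 0.0

# Hypothetical league of four pitchers.
league_ip = ["350.1", "300.0", "250.2", "99.2"]
share = workhorse_share(league_ip)
print(f"{share:.1%}")  # 65.0%
```

Working in outs rather than floats avoids treating "250.2" as 250.2 innings when it really means 250⅔.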

As I may have hinted, I hope that HHS contributors will be interested in using this site to help strengthen our understanding of and interest in 19th century base-ball, particularly for the period 1893-1900, when the game had taken almost all important aspects of its modern form (excepting the foul strike), but was hampered by the absence of what we tend to see as the appropriate league structure, and by the fact that year numbers begin with 18–, which seems quaint.

In opening up discussion on 19th century ball, Doug has surprised me by his choice of topic — I never would have thought of CG/relief pitching as a way into the era — but it looks like a great choice, and I’m going to take some time to tool up and see whether I can find things worthwhile to contribute.

But in the meantime, I want to add two comments: one minor technical matter — the “%” markers need to come off the legend items on the right. Someone as slow as I am can be confused by it.

The other is a perpetual question for me, and I’d like to know whether anyone simply knows the answer. Ever since I first learned about the 1893 pitching box change and saw league hitting and pitching figures, which is now almost fifty years ago, I have been unable to account for why the greatest hitting season in baseball history was 1894 and not 1893. It’s obvious why runs scored would jump about 30% when the box became the mound and was moved back ten feet. What a difficult adjustment for pitchers, and how much more time batters had to react to pitches! But why should runs jump another 12% the following year, when pitchers had gained a year’s experience to compensate for the sudden change? In 1895, batting levels reverted to what they had been in 1893 and then continued to subside, making 1894 a permanent outlier. Does anyone have an explanation for why 1894, not 1893, was the 19th century Year of the Hitter?

One final comment: The change of 1893 was a dramatic one, but perhaps not as lasting in its statistical effects as the 1920 change to the Lively Ball. And just looking at Doug’s charts here, it seems that the years following 1904, which led into the true Dead Ball era, were, in terms of pitching, at least as dramatic as the 1893 changes, and in some respects much more so. If we want to demarcate the major periods of baseball history, I wonder which dividing lines have been, in fact, the most dramatic, and whether the 1892/93 divide, which for me is the most fundamental one, was, in fact, the most dramatic. I think this is an important question, given how baseball history has persistently tended to recognize 1901 as the year that initiates the “modern” era, as if league structure (or perhaps the foul strike rule) were the heart of baseball.

Uh oh: “the “%” markers need to come off the legend items on the right” . . . what does that mean? It means I forgot to specify I was referring to the final chart.

Thanks for the feedback, epm.

I’ve updated the legend on the last chart, to hopefully make it clearer. Also, to your point about changes in reliever usage after 1904, I added another data series to the first chart that shows that quite dramatically.

As to why batters had their way in 1894 even more than in 1893, possibly it was because of batters adapting their approach to be even more aggressive about exploiting their new advantages. Put another way, had batters changed their approach (i.e. adapted) faster in 1893, that year might have looked more like 1894. But, adaptation, even to more favorable circumstances, still takes time.

Thanks for the changes, Doug. I’ve often thought the answer on 1894 could be as you say, but I see no reason why batters, who certainly adapted quickly in 1893, would continue to improve their adaptation while pitchers remained unable to adjust, until suddenly the dynamic reversed a year later. It makes sense only if you consider one side of the equation and not the other.

So I’m hoping there is some data point about 1894 that I simply have never discovered — a temporary change in the ball; some odd field ground rules; whatever . . . One thing I’m considering doing is to go through the Retrosheet daily scores for those years, so we could see whether the apparent jump in runs from ’93 to ’94 may not, in fact, have been something that built up and subsided in a smooth curve over ’93-’95. I’d love to have those results, but it has occurred to me that the process of compiling the day-by-day data may not be as thrilling as it first appears, and that there are alternative life activities I may need to prioritize, such as naps and snacks . . .

While I don’t share the drive to research 1800s players, I do share your general sentiment that the originality of analysis on here is much more relevant in a historical perspective. The best work here, like this piece by Doug, covers historical contexts and changes in baseball over time. Future player projection and comparative analysis of guys vying for MLB active rosters is sexier, and sometimes even funded (ha!), but in some significant ways it relies on deeper dives into historical context like this one.

Doug, I read every word. I process it. Your work not only helps me understand and deepen my affection for the game of Baseball, it helps keep the statistical analysis part of my brain well fed and healthy. I regret deeply that I can’t give you a proper reply worthy of the originality and significance of your research. At least know that the brevity of my responses to your posts over the years is not reflective of the high regard in which I hold what I am replying to.

Breitenstein is an interesting guy. I think I glossed over him once when talking about Nap Rucker – Rucker might be the best pitcher ever with exactly a .500 career record, and Breitenstein has a good argument for best with an under .500 record. (The other two are Jack Powell and Bobo Newsom – or Bob Friend, if you prefer someone a bit more modern)

He’s probably most well-known nowadays for throwing two no-hitters, including one in his first career start. He had pitched five games in relief, so he doesn’t quite match Bumpus Jones or Bobo Holloman. His no-no was the last in the American Association, since the AA folded after 1891.

He was part of the “pretzel battery” with fellow German-American Heinie Peitz. Talking about this period in history, from the mound distance change in 1893 to the birth of the AL as a second major league, Breitenstein has a good argument for 3rd or 4th best pitcher. Cy Young and Kid Nichols are clearly the two best. After that, you’ve got Breitenstein, Amos Rusie, and Clark Griffith. Breitenstein was more valuable by WAR, but Rusie missed time for various reasons – I remember from an earlier discussion that he sat out an entire season due to fighting with his insane owner Andrew Freedman. Rusie was certainly much more valuable on a per-inning basis, and was the premier strikeout pitcher of his time.

He also had a very successful minor league career – after he retired from the NL, he pitched in the Southern Association, mostly for the New Orleans Pelicans, going 165-92 with a 2.02 ERA.

Not a Hall of Famer, but a guy worth remembering.

CC, Breitenstein’s 1893 season is like an exaggerated Dick Farrell 1962 or Ned Garver 1950 — 11.3 WAR with a losing record! A spectacular season. I’d go with the Hoosier Thunderbolt over Breitenstein for the balance of the era, but that’s no knock on Breitenstein. (Freedman did more than knock Rusie out of the 1896 season; his demand to cut Rusie’s salary effectively ended Rusie’s career at the age of 27.)

It would be interesting to pursue your idea of finding the supreme losing pitcher of all time. You might add Murry Dickson to your list, especially since the War may have pulled him out of two surefire winning seasons, given his youth, momentum, and team, that probably would have put him over .500 for his career.

Bumpus was unique. Bobo was like Breitenstein: he’d had several (awful) relief outings before his no-no debut. Bill Veeck claimed he told Marty Marion to start Bobo, who was whining about not getting a chance, so that he could send him down to the minors with a clear conscience; he regarded the no-hitter as a curse.

Breitenstein’s 1893 season ranks second in WAR in a losing season, trailing only the 18.0 WAR put up by Jim Devlin in 1876. Devlin recorded a 30-35 record with a 1.56 ERA (168 ERA+) in 622 IP for the Louisville Grays (who finished the season at 30-36). The Grays’ defense looks a little suspect as Devlin allowed 201 unearned runs to go with the 108 of the earned variety that he gave up.

The top such seasons of the live ball era belong to Jon Matlack (9.1 WAR, 13-15) for the ’74 Mets and Phil Niekro (8.9, 16-20) for the ’77 Braves. The career list is headed by Jack Powell (56.0 WAR, 245-254), Breitenstein (52.1, 160-170) and Bobo (51.7, 211-222).

Doug, It wasn’t the Louisville defense alone that was suspect. Devlin and others were throwing games. They were, in turn, thrown out of baseball, and the club was disbanded.

What a surprise to see Matlack’s name on this list. Nothing in that ’74 season suggests a stratospheric WAR. It looks to me as though he’s getting enormous credit for the team’s terrible defense.

I suspect, beyond poor Mets defense, the biggest contributor to Matlack’s big WAR was the estimation of what an average pitcher would have given up in the same innings against the same teams. In Matlack’s case, it was 4.72 RA/9 compared to the 2.78 that he actually gave up, a huge difference of almost two runs. His next largest gap was 1.1 runs in 1978, when he garnered his second highest WAR total of 6.4.

You’re right, Doug. That does seem to be the case. It’s hard for me to see the basis of the extremity of that calculation: it’s basically a strength-of-schedule issue, and I don’t see Matlack up against an exceptionally unbalanced set of opponents. It’s true he didn’t get to pitch against his own, weak offensive team, and he faced above-average run-producing teams a bit more than normal on top of that. But the two big outlier run-producing teams were LA (+0.78) and Cincinnati (+0.61), and he only started against them three times each (out of 34 starts), while he started four times against the offensive weak sister, SD (-0.81). His strength of schedule simply isn’t out of normal range, and his park factors come out to about 99: almost average.

So I think that what we’re seeing in that projected 4.72 RA/9 figure is mostly a repeat of the defensive weakness of the Mets, since the figure takes account of both park factors and defense. That is, since RA/9def is built into RA/9avg, and the Mets RA/9def is calculated as an outlier, which park factors and strength of schedule are not, it’s probably RA/9def causing the outlier effect in RA/9avg.

I did a very crude estimation, calculating game-by-game Matlack’s runs allowed above or below what his opponents scored that season, weighted by his innings pitched. The result was 1.46 runs better, which, when added to the Mets’ 0.38-run defensive deficit, gets Matlack to 1.84, not far from the 1.94 runs better that B-R credits him with.
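One way to read that crude estimate, as a Python sketch: for each start, compare the opponent’s season R/G with the pitcher’s runs allowed per nine innings in that game, and average the differences weighted by innings. This is an interpretation of the method described above, not the commenter’s actual spreadsheet; the function name is hypothetical and the three starts are invented.

```python
def ip_weighted_runs_better(starts):
    """starts: (innings, runs_allowed, opponent_season_rpg) for each start.

    Returns the innings-weighted mean of (opponent R/G - runs allowed per 9).
    Positive values mean the pitcher held opponents below their usual scoring.
    """
    total_ip = 0.0
    weighted_sum = 0.0
    for ip, runs, opp_rpg in starts:
        # ip * (opp_rpg - 9 * runs / ip) simplifies to ip * opp_rpg - 9 * runs,
        # so runs from a start with no outs recorded still count, with no division by zero.
        weighted_sum += ip * opp_rpg - 9 * runs
        total_ip += ip
    return weighted_sum / total_ip if total_ip else 0.0

# Invented starts; innings as decimal innings (6 2/3 IP would be 6.667, not 6.2).
example_starts = [(9.0, 2, 4.5), (6.0, 3, 4.0), (9.0, 0, 3.5)]
better = ip_weighted_runs_better(example_starts)
print(better)  # 2.125
```

Adding the Mets’ 0.38-run defensive deficit to the commenter’s 1.46 gives the 1.84 quoted above.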

Matlack also did have a slightly tougher than usual schedule, with a 4.23 avg R/G of his opponents compared to league avg of 4.15, or 4.21 excl. the Mets. He faced the top three offensive teams (Dodgers, Reds, Pirates) 11 times in 34 starts, going 1.53 runs better in those games, better than he did overall.

Perhaps the 0.10 divergence between your results and B-R was just park factors, Doug. I’m sure you have it right: I too estimated a slightly tougher than expected schedule for Matlack. The fact that this adds up to 9.1 WAR still seems very strange to me.

I guess I’ll look for the time to go through his season game-by-game, looking at the contexts for his pitching. If I can get the 9.1 figure to make sense to me — I mean after-the-fact intuitive sense, rather than just an understanding of how the calculation was done — then perhaps I’ll be able to make better sense of Rick Reuschel’s 1977 9.4 WAR figure as well.

I ran the PI for pitchers from 1871 to date with 100+ GS, W-L% equal to or less than .500 and sorted by ERA+. Here are the pitchers with ERA+ equal to 114 or higher.

| ERA+ | W-L% | GS | Player |
|---:|---:|---:|---|
| 150 | 0.486 | 153 | Jim Devlin |
| 124 | 0.374 | 158 | Ned Garvin (not to be confused with Ned Garver) |
| 122 | 0.496 | 132 | Johnny Rigney |
| 121 | 0.484 | 226 | Jim Scott |
| 119 | 0.485 | 272 | Thornton Lee |
| 119 | 0.500 | 274 | Nap Rucker |
| 118 | 0.456 | 101 | Justin Thompson |
| 117 | 0.500 | 111 | Drew Pomeranz |
| 117 | 0.477 | 241 | Toad Ramsey |
| 116 | 0.497 | 162 | Doc McJames |
| 116 | 0.492 | 219 | Frank Sullivan |
| 115 | 0.500 | 183 | Jose Quintana |
| 115 | 0.500 | 211 | Hiroki Kuroda |
| 114 | 0.459 | 108 | Ivy Andrews |
| 114 | 0.481 | 161 | Johnny Niggeling |
| 114 | 0.498 | 318 | Jon Matlack |
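The Play Index query above amounts to a filter followed by a sort. A minimal Python sketch using a few rows from the list plus three made-up careers that each fail one criterion; `CareerLine` and `best_non_winners` are hypothetical names, not part of any Baseball-Reference tool.

```python
from typing import NamedTuple

class CareerLine(NamedTuple):
    player: str
    era_plus: int
    wl_pct: float
    gs: int

def best_non_winners(careers, min_gs=100, max_wl=0.500, min_era_plus=114):
    """Careers with enough starts, a .500-or-worse record, and a high ERA+."""
    keep = [c for c in careers
            if c.gs >= min_gs and c.wl_pct <= max_wl and c.era_plus >= min_era_plus]
    # sorted() is stable even with reverse=True, so ERA+ ties keep their input order
    return sorted(keep, key=lambda c: c.era_plus, reverse=True)

careers = [
    CareerLine("Jon Matlack", 114, 0.498, 318),
    CareerLine("Jim Devlin", 150, 0.486, 153),
    CareerLine("Hypothetical A", 130, 0.540, 200),  # winning record: excluded
    CareerLine("Nap Rucker", 119, 0.500, 274),
    CareerLine("Hypothetical B", 125, 0.450, 60),   # too few starts: excluded
    CareerLine("Hypothetical C", 105, 0.480, 250),  # ERA+ below cutoff: excluded
]

names = [c.player for c in best_non_winners(careers)]
print(names)  # ['Jim Devlin', 'Nap Rucker', 'Jon Matlack']
```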

I think it should be noted that several of those pitchers were active pre-1901, in the days of no gloves or tiny gloves, and official scorers’ decisions of error or hit were questionable.

You mean official scorers’ decisions aren’t questionable any longer? To me, scorers have become much more lenient in the past 20 years, giving the fielder the benefit of the doubt on hard hit balls and awarding hits where errors would have been more likely 30 or 40 years ago. Strictly a perception thing, but it’s sure seemed that way to me.

I am not saying that official scorers’ decisions are no longer questionable, just that it was more difficult to make judgments prior to 1900. Concerning your comment below about muddy fields, I remember reading in the Charlton Chronology that there were games played with actual puddles on the playing field, usually in the outfield.

I was being half facetious there, Richard. But, I do think scorers have become kinder to hitters and defenders (and tougher on pitchers) in recent years.

Puddles in the outfield would definitely help fielders keep the ball in front of them; cuts down on those annoying gappers that turn into triples and IPHRs.

Your many references to the Charlton Chronology have convinced me to buy a copy. They’re available on Amazon (probably various versions), mostly used, but a few new ones starting from $21 incl. shipping.

It may be a sign of second childhood, but I’ve followed up on my interest in the counter-intuitive rise of hitting in 1894, a year after we might expect hitters to have their peak advantage over pitchers, in the immediate wake of the pitching distance change. Using Retrosheet line/game scores, I’ve calculated the R/G (both teams) for each month from September 1893 through June of 1895 (and also for early 1893). The results do seem to show something odd:

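| Month | R/G (both teams) | Notes |
|---|---:|---|
| Apr. 1893 | 11.6 | only 4 dates/16 games |
| May 1893 | 13.3 | Apr./May average: 13.2 |
| Sept. 1893 | 12.5 | |
| Apr. 1894 | 12.3 | 11 dates/50 games |
| May 1894 | 12.0 | |
| June 1894 | 14.9 | |
| July 1894 | 16.2 | |
| Aug. 1894 | 16.3 | |
| Sept. 1894 | 14.5 | |
| Apr. 1895 | 14.8 | 10 dates/45 games |
| May 1895 | 14.1 | Apr./May average: 14.3 |
| June 1895 | 12.3 | |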

The question was whether there was a smooth curve showing a gradual increase/decrease in hitting over the period late ’93 to early ’95, or whether there was a sharp deviation, which might suggest the introduction of some unknown factor.

If hitters were figuring out how to get even more leverage over pitchers beyond the initial boost that the April ’93 change in distance provided, one would expect a gradual process, with pitchers also gradually figuring out how to counter it, which was evident by ’95.

What we see is this: Over the initial weeks of ’93, in the wake of the distance change, runs rose to 13.2/G (the 1892 figure had been about 10.2). By the end of the season, pitchers seemed to have recovered a bit, with the last month’s figure at 12.5. (There were 159 games in Apr/May; 146 in Sept, so the samples are pretty close.)

1894, the year of the hitting explosion, actually began with the 1893 trend continuing, and pitchers battling down the R/G to 12.0 in May. But then, as you see, the explosion suddenly kicks in — we actually see it start a few days before Decoration Day (as it was then known). If you divide the season into two parts — April 19-May 31 and June 1-Sept 30 — then the R/G rates are:

April-May: 12.1

June-Sept: 15.5

The line is pretty sharply demarcated, and the increment in R/G is 28% (!). This does not look like hitters figuring out how to handle a 60’6″ distance a season after the change was made — a change they exploited from the start. It looks like some other change in playing conditions: Factor X. I have no idea what Factor X could be — I’ve located no mention of anything explaining the ’94 rise in runs other than the ’93 pitching change (which begs the question), and no mention of any mid-season change in productivity. But what we see is a sharp rise in scoring in June ’94, reaching an absurdly high plateau in July and August, subsiding back to the June level in September and early ’95, and then returning to 1893 levels (which is what the overall 1895 season produced).
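The split-season comparison reduces to two steps: pool runs and games within each period, then compare the two rates. A Python sketch; the helper names are hypothetical and the game counts in the first example are invented.

```python
def period_rpg(months):
    """months: (games, runs_per_game) pairs; returns total runs / total games."""
    total_games = sum(games for games, _ in months)
    total_runs = sum(games * rpg for games, rpg in months)
    return total_runs / total_games

def pct_increase(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after / before - 1) * 100

# Invented months: 10 games at 12.0 R/G and 30 games at 16.0 R/G.
pooled = period_rpg([(10, 12.0), (30, 16.0)])
print(pooled)  # 15.0

# The April-May vs. June-Sept 1894 rates quoted above.
jump = pct_increase(12.1, 15.5)
print(f"{jump:.0f}%")  # 28%
```

Because the monthly rates in the table are rounded to a tenth, pooling them this way can differ slightly from a rate computed from raw run totals.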

Whatever inflated runs in mid-’94, it’s certainly possible that the pattern of staged reversal could simply represent a “learning curve” on the part of the pitchers, one that kicks in after a couple of months of inflated scoring. But the long plateau of 1893-May ’94 that precedes the inflation makes the hitters’ sudden explosion look like anything but a learning curve.

That’s as far as I can get. If anyone has knowledge or an idea about this unusual phenomenon, I’d appreciate very much learning about it. This is a little off Doug’s topic of the rise of relief pitching with the 60’6″ distance, but I doubt there will be a better opportunity for discussing this.

I realize this is an insane shot in the dark, but I wonder if the Panic of 1893 (which went on for some time) impacted rosters. And, one more thing that might have been mentioned above. In February of 1895, the league reduced the size of gloves and permitted the dirtying of balls.

Mike, That fact about the rule changes of 1895 is interesting, though the timing may not quite solve this problem. Where did you find it? I use the Baseball Almanac to track rule changes and it doesn’t mention those.

As for the Panic of 1893, I think you’re exactly right: it’s an insane shot in the dark. If someone ever casts light on this mystery we’ll be able to take a look and see if you hit anything.

I excel in insane shots in the dark. Y’all who have been patient with me over the last several years know that.

I thought the Panic, given how serious it was, might have caused teams to be folded or stripped down, with perhaps players not being paid because owners were strapped and attendance would be down. There could have been a bleed-off with the one-year creation of the Union Association as well.

The rule came from doing a down and dirty on team liquidations in 1893/4/5, where I came across a reference to Cap Anson pushing for a rule change on glove sizes that was adopted Feb 27, 1895.

Also, I checked Baseball Prospectus. There were several rule changes in that period, including size and shape of bats, different treatment of foul bunts and foul tips. I’m not sure how that impacts scoring, but perhaps there’s a cumulative impact.

Mike, I don’t think there was any effect on rosters. Richter’s history of baseball (1914) begins its account of the 1894 season by saying, “The season was uneventful, but marked the gradual return of prosperity with peace” (just what we keep hoping for nowadays!). Francis Richter was an observer throughout the late 19th century, and I’d expect him to have noted extreme financial pressures, which he did in his accounts of league struggles and baseball wars.

The UA was actually a decade earlier: it was the 1890 Players League that had most recently disrupted things, but the major hit was to the American Association, which folded in 1891. The NL was sitting pretty for the rest of the decade, with a monopoly. League membership stayed stable till 1899, so I’m puzzled about your reference to team liquidations.

The 1894 rule changes on foul bunts (now strikes) would certainly not have worked to increase scoring that year; the 1895 rule on caught two-strike foul tips would have had some impact to restrain scoring in that year. However, there’s no reason an effect would have been delayed till June (unless that’s when the rule was instituted . . .?).

I think Richter is wrong on the history of the Panic of 1893–it wasn’t over in 1894, when unemployment peaked. Then the Pullman Strike in mid 1894, over reduced wages but same rents in company towns, and a Wall Street-aided bailout of the government in Feb 1895. Here’s an interesting link: https://www.jpmorgan.com/country/US/en/jpmorgan/about/history/month/feb

Mike, Richter has nothing to say about the Panic, and, of course, you’re correct that the effects were still being felt in 1894 and beyond, just like the 2008 recession’s impact lasted multiple years. Richter’s just talking about the National League, and since his accounts of baseball history do include economic impacts on the game, I think what we can conclude from his comments is that he observed none that he felt merited mention from the Panic in 1894 (he also does not mention the Panic in his brief account of the 1893 season).

I suspect that histories of baseball that discuss the 2009 season will probably not comment on the recession, since it did not have a demonstrable impact on the game (at least that I know of; the Madoff scandal probably had greater impact, given the effect on the Mets). It doesn’t mean the recession wasn’t a broad social factor, which we well know it was.

Here are the NL (=MLB) attendance figures from 1892 through 1895, which show no detectable impact from the Panic (though growth did slow somewhat in 1894):

1892: 1.69m

1893: 2.17m (+28%)

1894: 2.35m (+8%)

1895: 2.80m (+19%)
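The growth figures can be checked directly from the totals; a short Python sketch (the function name is hypothetical):

```python
attendance_m = {1892: 1.69, 1893: 2.17, 1894: 2.35, 1895: 2.80}  # NL attendance, millions

def yoy_growth_pct(series):
    """Year-over-year growth, in whole percent, for each year after the first."""
    years = sorted(series)
    return {year: round((series[year] / series[prev] - 1) * 100)
            for prev, year in zip(years, years[1:])}

growth = yoy_growth_pct(attendance_m)
print(growth)  # {1893: 28, 1894: 8, 1895: 19}
```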

EPM, here’s a link to a site that talks about 19th Century equipment. I don’t have a way of judging its credibility:

http://www.19cbaseball.com/equipment.html

Nice article. Notice that the 1893 rule changes eliminated the flattened bat preferred by hitters, perhaps to balance somewhat the effects of the pitching change.

That rule changing to a rounded bat might also explain why 1894 was more favorable to hitters than 1893. By 1894, batters would have more fully adjusted to their new lumber and its effects on their swings.

Perhaps. But, as with the “learning curve” explanation vis a vis pitching, why did they all figure out how to handle their new 1893 bats together at the end of May 1894? (And why did that sudden burst of learning subside a year later? The “learning curve” model doesn’t really work on the pitchers’ end — how do you reverse the effects of batters mastering use of the rounded bat?)

Fully round bats were not actually novelties; the flat-sided bat was only allowed 1885-1892 — all 1893 players would presumably have learned to play the game with fully rounded bats, and mid- and late-career players would have used them when they first came up to MLB.

All this business about flattened bats leaves us with a bit of a sticky wicket in figuring things out, ay?

I think Bells’ comment below is worth noting. We may be searching for a specific reason for something that happened as a result of many factors, not least among them chance.

Just a small note, Doug: “chance” is not a reason: it’s just a term. “Chance” means we are unable to determine the reason or reasons, so we use the term to stand in for it/them.

Roster sizes did appear to shrink in 1893-94. Here are the numbers of non-pitchers, league-wide, with fewer than 200 PA:

1892 – 64

1893 – 57

1894 – 53

1895 – 82

1896 – 67

1897 – 62

1898 – 94

1899 – 86

But I didn’t see the same kind of result for pitchers. For example, here is the number of pitchers with fewer than 30 IP:

1892 – 36

1893 – 31

1894 – 49

1895 – 33

1896 – 36

1897 – 25

1898 – 36

1899 – 37

Unless, that is, the high number of such pitchers in 1894 reflects teams bringing up emergency replacements when needed and then letting them go.

The 1894 roster figures are interesting, Doug, especially on the pitcher side. I’m going to explore this further — it seems likely that the roster and scoring issues are related.

This might be an explanation. Eleven of those 49 pitchers in 1894 were primarily position players that year, which would make sense in a season with offenses running wild and likely lots of blowouts.

Don’t know if there was similar usage of position players in the other seasons.

Great work, Mike. Very odd, indeed.

One thing to consider is field condition. Muddy fields in the early season were a persistent problem (one reason for so few games in April), and this could have continued well into May given the poor drainage in place for most if not all fields. Wet fields would slow down balls and runners and take quite a bite out of the offense in a game dominated by small-ball tactics. This supposition, if correct, would also account for offense slowing down in September.

The field condition hypothesis should apply to seasons generally, and so it seems to run into problems in June 1895. I’ll take a look at the summer pattern for ’93 and ’95 after I catch up on the snacking I missed, but given the season stats available on B-R, there doesn’t seem to be any reason to expect a 25-30% summer scoring bump in those years.

In 1893, the April/May R/G rate was 13.2; the June/July rate was 13.9. If dry fields were the cause of the rise in ’93, the effect was on the order of 5%, not 25%+.

I imagine the contraction from 3 major leagues in 1890 to just one in 1892 had some effect as well – we know expansion causes odd statistical quirks, and contraction is no different.

The only problem is that we would have expected to see that effect in 1892, not 1894. Also, the separation between major and minor leagues wasn’t quite as stark in the 19th century as it is now. And since the Players League took a lot of top shelf talent, a lot of the “major league” guys in the 1890 NL and AA really weren’t – they were fill-in guys who washed out with contraction.

Still, it’s another potential factor.

I’ve got a few guesses:

1. Statistical noise. I don’t think it’s implausible that this is just fluctuation due to whatever factors. A month of games in a… 12(?) team league isn’t that much, and things do fluctuate just because of this and that – weather, player injuries, randomness. So month-to-month, or even quarter-to-quarter, these trends aren’t impossible to attribute to simply noise.

2. Pitcher fatigue. Pitchers were workhorses back then, and sports conditioning was certainly not a thing. Although Doug’s wonderful graphs don’t go so far as to show average IP for starters in these years, the three main graphs do tell a tale: pitchers pitched fewer CGs, passed fewer inning thresholds (like 300 IP), and were relieved progressively more often in 1893 and 1894. It was harder work to throw the longer distance, and by June of 1894 they were really feeling that, but the historical precedent of CGs and keeping pitchers in took a while to turn around. In 1893 scoring was up, which probably everyone could notice even without stats, but the easy explanation was the mound change. It maybe took until later in 1894 for teams to clue in that leaving their guy in was really letting the other team score too much. Maybe relievers were brought in more than before, but probably not enough, hence the higher run totals. Things take a while to respond; I’m sure no one (in a position of power, at least; I believe there must always have been nerdy stats fans) was averaging R/G and noticing this trend.

3. As for why 1894 and not 1893… everyone was figuring it out, pitchers and batters alike. They moved back the mound and runs went up just because of how huge that change was, but I’m sure it could have taken a while for hitters to figure out how to optimize the opportunities that came with the increased distance. I’ve always been astounded by the end of the dead ball era and Ruth’s impact on the game – a stunning aspect of it was that he single-handedly showed people you could slug balls out of the park, but then soon enough, lots of guys were putting up comparable numbers, and a bunch of people were achieving what was unthinkable a few years earlier. But it didn’t happen overnight; innovations take time to take leaguewide hold. I think it’d be interesting to see which hitters might have had an increase in fortune pre-to-post 1893 – I wonder if that would give any clues as to what hitting strategies were successful in the new setup.

It would most likely be a combination of factors – maybe hitters got better immediately but took all of 1893 to tease out optimal strategies, then came out swinging (figuratively and possibly literally) in 1894, but the pitchers worked to hold them off, got tired earlier in the season than usual, and it took some time for management to realize they could and should take those pitchers out instead of leaving them in sometimes, a trend which took years to correct but eventually came more in balance to bring the R/G back down. Those are my thoughts.

“I think it’d be interesting to see which hitters might have had an increase in fortune pre-to-post 1893 – I wonder if that would give any clues as to what hitting strategies were successful in the new setup.”

I’m looking at that right now for a future post. Limited data is, alas, a significant constraint, but if I miss something in the analysis, I’m guessing our astute readers may pick up on it.

Incidentally, that limited data is why I couldn’t show you IP in relief vs. IP starting for this post.

A very thoughtful reply, bells. I particularly appreciate the detail of your thought experiment in the final paragraph, which really does address the issue of timing in various ways. However, of course, I’m going to push back on your ideas, which I’ll take in turn.

1. It is naturally possible that any phenomenon is the result of a confluence of so many factors that none can be seen as determinative, and we substitute “chance” for an explanation. When we’re looking at statistics (to the degree I understand them), that’s pretty much what “noise” is about, and it’s equally true that any statistical pattern may simply be a confluence of unrelated factors. However, my understanding is that the more well formed a pattern is, the less likely that “noise” will be a satisfactory answer to its underlying causes. Here are the consecutive months we are talking about, taking April/May as one month and eliminating season boundaries: 12.5 / 12.1 / 14.9 / 16.2 / 16.3 / 14.5 / 14.3 / 12.3. That may be noise, but it sure looks a lot more like the influence of a limited range of factors. I don’t think the answer of noise rises above its ever present theoretical possibility.

2. Pitcher fatigue is a good explanation for individual cases. However, it seems unlikely that a leagueful of pitchers would show such apparent synchronicity of symptoms. You could argue, I suppose, that the slightly lowered rate of runs in April/May ’94 (12.1) represented manful efforts to counteract the “came out swinging” phenomenon, but there’s no indication that the hitters did come out swinging; that inference is based on what happens from June on: since they were swinging so hard then, they must have done so in April/May without actually seeing any results because the pitchers somehow knew what was happening and stepped up their game, and then the pitchers all gave out on Decoration Day . . .

3. Babe Ruth’s impact took a couple of years to fully influence play (his home run record of 29 in 1919 spurred the change in ball that led to his 54 HR in 1920). Here, you’re looking at a change that takes place over a few days. It’s true that on Decoration Day, Bobby Lowe famously hit four HRs, the first player ever to do so. Perhaps that galvanized the rest of the hitters (who had actually already begun to step up their production a couple of days before) to transform their game within days, but there’s no narrative record to support that. Lowe’s game looks like an effect of the sudden shift, not the cause, and there is no other narrative of a single player or two playing a role like Ruth’s. Again, as in #2, it’s the synchronicity of the effect that makes the explanation appear to rest on factors other than a series of individualized responses.

I think the response that has so far best addressed the particular shape of this phenomenon is Doug’s suggestion about muddy fields (although that tack doesn’t seem to work out, since there’s no comparable effect in 1893). I say this because Doug was working on the issue of synchronized timing (assuming, perhaps problematically, that fields all over the country would dry up at the same point in the year). At least on a scale of months, the 1894 hitting boom is puzzling precisely because it is such a discrete temporal event with so well-formed a shape of onset and subsiding. This is what makes explanations that rest on normal adjustments so difficult: “hitters/pitchers/managers adjusted . . .” becomes, “most hitters/pitchers/managers all adjusted at the same time . . .” The first is natural and plausible; the second seems unlikely, especially in light of the absence of any narrative record of a phenomenon that was so sudden and that, given synchronicity, must have been consciously noted by participants at the time.

Bot that I have anything better to offer. If Doug’s muddy-field answer is perhaps the most focused on the form of the problem, I think yours is obviously the most thoroughly thought out. . . . And I have no answer! (At least, none that is grounded in any form of data.)

I want to follow up my earlier reply to bells, which ended by saying, “Bot that I have anything better to offer . . .,” since my fingers couldn’t tell ‘b’ from ‘n’ on the keypad, by offering an idea that I think does have the form of a satisfactory explanation for the 1893-95 hitting pattern, but which is entirely imaginary: no information supports it and I’m not asserting that it is true, only using it as an illustration of the sort of explanation that would fit the facts.

(1) Imagine that in the 1893-94 off-season the NL contracted its season order for baseballs with a new company or with a continuing company that, out of error or on purpose, altered the standard for the balls in a way that made them livelier. (2) Imagine that the remaining inventory of 1893 balls was depleted in various parks over the period of, say, May 20 to mid-June, and the new balls came gradually into play over that short period. (3) Imagine that the resulting jump in run production, which would have stabilized at a higher level by the end of June, was recognized after several weeks by pitchers and managers, which led to various new strategies (earlier relievers, changes in pitch location, etc.) that, by September, began to show a limited but significant effect on cutting back runs. (4) Imagine that in the 1894-95 off-season, the NL contracted its season order for baseballs, and that, by chance or on purpose, the anomalous structure of the 1894 ball was not replicated, and the 1893 standards returned, the impact of which began to show up as the inventory of 1894 balls was exhausted in late May . . .

Now, I made all that up and there is no reason to think this is what happened. But it offers a simple, one-factor explanation for the data (the way that Doug’s muddy-field theory did, but in a way that is supported by, rather than refuted by, the fact that the phenomenon is not seen in other seasons). I think that potential explanations of this form have the best chance of being correct; unfortunately, even if one is correct, it’s unlikely we would ever actually know that it was.

Epm,

MLB switched balls in 1977 (Spalding to Rawlings). In the NL, scoring increased by only 0.42 R/G, yet it was noticeable and drew much comment. The AL had another variable, expansion, so I guess we would set the AL data aside.

Obviously, 1920 is another example, but certainly not as drastic as 1892-1894.

Paul,

I don’t really want to press my idea as a respectable hypothesis, because I have zilch to back it up — it’s just a model of how a hypothesis could fit the scoring data — but I noted on an earlier string that according to non-MLB testing reported on the website FiveThirtyEight.com, the baseballs used in last year’s season-long homer-fest were also, almost certainly without intent, juiced, despite meeting all the specs that MLB imposes on ball manufacture. I think this was in the back of my mind when the idea popped into my head to imagine a one-year manufacturing alteration in balls in 1894.

I doubt that in 1894 a mid-season change in run performance was noticed in real time, or that the types of statistical comparisons that were made in 1977 (when the change in manufacturer would have caused sportswriters to be on the alert for changes) would have led anyone to question the cause, other than that hitters were really turning it on. And, of course, the 1893 surge in scoring, which was fully expected, may have led to people viewing 1894 as simply more of the same. I have never seen any reference to the fact that early-season 1894 showed no rise in scoring, and, honestly, I wonder whether it’s ever been noted that after May, scoring jumped 28% through the end of the season.

Not too related to anything in particular: Al Reach, inventor of the cork-centered baseball, sold his sporting goods company to Albert Spalding in 1889. But if we’re gonna go red herrings:

1) Vitamin D shortage due to extremely overcast weather in damp spring initially weakened batters

2) Gamblers, in an effort to divert attention from overtly poor fielding by bribed fielders, began bribing pitchers exclusively

Paul, I think that with a little refinement your hypotheses will be at least as good as mine — and you have two to my lonely singleton!

Of anything that’s been postulated, proposed, suggested, or even joked, bells’ “pitcher fatigue” makes the most sense to me. I’m also digging “statistical noise” as acceptable and good enough to put this puppy to rest.

Thanks for the thoughtful reply as well, epm. One of the reasons I don’t participate in too many discussions here is because I simply don’t have time to come here and participate all the time, so I hope you’ll forgive the belated reply. Indeed it’s more than likely that we won’t be able to grab onto anything definitive through discussion, so I’m under no illusion as to the investigative reach of any points I’m making, but it’s an interesting thought experiment nonetheless. Just to reply in kind:

1. Your understanding of noise in statistics is similar to mine, but with a slightly different inflection on your second point. Yes, the more well-formed a pattern is, the less likely ‘noise’ will be an explanation, but my approach is somewhat more cautious – I put emphasis on avoiding the temptation to find an explanation to the point where it has people seeing patterns where there is just fluctuation. Yes, to a certain extent, ‘noise’ is when there are so many factors at play that they’re impossible to comprehend and explain, but it’s important to note that this doesn’t mean that they are systemic factors. I may get ten baskets in a row at basketball, but that doesn’t mean I’ve suddenly become better at basketball – it’s just a confluence of factors that are maybe interesting if I could understand them and break them down, but they’re small things in the synthesis of my ability, vision, feel, confidence, surroundings, etc that make up the ability to shoot a ball. Those things are hard enough to isolate and small enough that it’s more convenient to label it as random fluctuation around my baseline ability, even though there is something there.

Anyway, it can be lazy to posit that as an explanation for anything, but it’s important to note it as a possibility. Whether that rises above the background level it’s always at, I think, would depend largely on sample size. Is a month of 1894 baseball scores a big enough sample to have a 28% change be meaningful enough to overcome the relatively small sample size? You seem more bullish than me on it, although I’m certainly not discounting its meaning (and indeed tried to provide a possible explanation). Just as an example of reading into stats, my first thought was of dWAR, a stat I associate with a lot of ‘noise’ and random fluctuation. My first player to think of looking at was the personification of consistency, Eddie Murray. Look at some numbers from the middle of his career for dWAR (starting age 30): -0.3, +0.4, -1.4, -0.1, -0.7. Should we say ‘what happened to Eddie Murray’s defense at age 32’ and try to find an explanation for those numbers? Or is it a more reasonable hypothesis to say that he was his regular consistent self and that the stats are within the realm of reasonable fluctuation? The example I pulled, of course, is affected by a different angle, namely that one could use it as a critique for the measurement of dWAR and suggest it’s a calibration issue more than a performance issue. Runs scored are a bit more of a solid measure. But my point is just that when I see those numbers you posted of monthly fluctuations, I view them with a similar eye of caution that I would those dWAR numbers, and don’t want to be too quick to assume there’s a meaningful explanation underneath.

2 & 3 go together – I think you’re not interpreting what I’m saying in the way that I intended. I brought up Babe Ruth and hitting not as an example of how hitters might have changed to hit more in 1894, but rather of how it took a while for managers to realize that they had to change their strategies around pitching to counter the change in hitting that was already underway. Baseball is always an interesting balance of batting and pitching, and I’m looking at it more from the side of how pitching needed to change around the new rules than of how hitting was revolutionized. I don’t think it’s a matter of hitters suddenly figuring out how to hit; I think it’s a buildup of small, but cumulatively possibly systemic, factors. Here’s how my idea works: 1893, rules change, hitters do better, more runs are scored. In the offseason, maybe some hitters talk to each other about things that are working for them with the changed rules. Maybe pitchers do too. Who knows? Maybe, for example, the longer distance makes pitches easier to contact, and batters realize they can foul off pitches to work the count so the threat of a walk will give a more favorable pitch. Maybe pitchers talk about how they are changing strategies to work around batters. Maybe both of these strategies mean more pitches in an at bat, and cumulatively in a game, and at this time no one is thinking about fatigue or workload – pitchers just pitch until the game is done, that’s written in stone. So perhaps it’s not as accurate to say that batters come out swinging in 1894 as to say pitchers come out throwing. It works, for a bit, but throwing complete games with more pitches (without even having pitch count data, I would think there would be more pitches if there were more hits and runs than pre-1893, just because that would mean more batters) takes its toll.

Every pitcher is different, but enough pitchers have been overused in the first few months that it doesn’t work as well anymore and the effects are seen in the data. Baseball orthodoxy is slow to respond, and since runs already went up the prior year, a further 28% run increase isn’t as noticeable to managers as it otherwise would have been, so it takes a while before a strategy develops around maybe pulling pitchers a bit earlier. So it’s not like everyone suddenly discovers how to hit, or everyone suddenly forgets how to pitch, but enough cumulative factors come together to have an effect of something that has gradually been building.

But maybe we can just call that noise. Muddy fields or different baseballs would be great explanations too, and without more info it’s just guesswork. But I think my guess is not outrageous.

bells, Not everyone is retired and has the option to prattle endlessly online the way I do. Your posts are always thoughtful, and there’s nothing belated about your reply – I’m grateful you thought it worthwhile to write another detailed response to my arguments.

On your point #1, we really do see eye to eye on the issue of statistical noise, and, as you note, where we differ is on the likelihood that the 1893-95 runs scored pattern provides a data set robust enough to suggest that noise is an unlikely solution. In your comment, you point to the skimpiness of one month’s runs-scored data as a basis for taking a 28% rise as significant. I think that argument has some merit, but that is not what the data shows. The data concerns a well-formed pattern that extends over one full season and parts of two others, totaling 1267 games. And the rise in runs scored, although pivoting around a single month’s 23% rise, involves a total rise from the first two months of 1894 (Apr/May, 182 G) to the two midsummer months (July/Aug, 314 G) of 34.4%. Here are the figures (each percentage is the change in R/G from the preceding period):

Sept ’93: 146 G (R/G 12.49, slightly below season ave. of 13.14)

Apr/May ’94: 182 G, -3.1%

June ’94: 150 G, +23.2%

July ’94: 157 G, +8.8%

Aug ’94: 157 G, +0.3%

Sept ’94: 152 G, -11.1% (1894 R/G season ave.: 14.70; to May 31, 12.09; after, 15.47)

Apr/May ’95: 183 G, -1.3%

June ’95: 140 G, -13.7% (R/G 12.31, slightly below season ave. of 13.16)

(Net change, Sept ’93 to June ’95: -1.4%)
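The period-by-period percentages above can be chained to check the summary figures in this comment. A rough Python sketch, assuming each percentage is the change in league R/G (both teams combined) from the preceding period, starting from Sept ’93 at 12.49; the helper names are hypothetical. Because the listed percentages are rounded, the reconstructed levels drift by a few hundredths from the quoted R/G values (12.09, 15.47, 12.31):

```python
# Reconstruct implied R/G levels from the period-over-period changes listed above.
# Assumption: each percentage is relative to the preceding period, starting at
# Sept '93 = 12.49 R/G; games are league games in each period.
periods = [
    # (label, games, percent change from previous period)
    ("Apr/May '94", 182, -3.1),
    ("June '94", 150, 23.2),
    ("July '94", 157, 8.8),
    ("Aug '94", 157, 0.3),
    ("Sept '94", 152, -11.1),
    ("Apr/May '95", 183, -1.3),
    ("June '95", 140, -13.7),
]

def chain_levels(start, changes):
    """Apply successive percent changes to a starting value; return each level."""
    levels, current = [], start
    for pct in changes:
        current *= 1.0 + pct / 100.0
        levels.append(current)
    return levels

def weighted_mean(values, weights):
    """Game-weighted average of per-period R/G values."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

start = 12.49  # Sept '93 R/G
levels = chain_levels(start, [pct for _, _, pct in periods])
games = [g for _, g, _ in periods]

for (label, g, _), rg in zip(periods, levels):
    print(f"{label:12s} {g:4d} G  R/G ~ {rg:5.2f}")

net_change = 100.0 * (levels[-1] / start - 1.0)
apr_may_94 = levels[0]
jul_aug_94 = weighted_mean(levels[2:4], games[2:4])  # July + Aug '94
jun_sep_94 = weighted_mean(levels[1:5], games[1:5])  # June through Sept '94
print(f"Net change Sept '93 -> June '95: {net_change:+.1f}%")
print(f"Apr/May '94 -> July/Aug '94: {100 * (jul_aug_94 / apr_may_94 - 1):+.1f}%")
print(f"Apr/May '94 -> June-Sept '94: {100 * (jun_sep_94 / apr_may_94 - 1):+.1f}%")
```

With these inputs the chained values land within rounding distance of the 34.4% peak rise, the roughly 28% post-May rise, and the -1.4% net change stated in the comment.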

Each of these units includes more than one team-season’s worth of games (seasons were 132 games). This is not like your example of hitting ten consecutive baskets. That mid-1894 28% rise I referred to was sustained over a period of 616 league games, or 78% of a season; the peak rise of 34% was sustained over 314 games, or 40% of a season. At both ends of the curve we see far lower season-long norms that are almost identical. I don’t think this can be considered a small sample size or a reflection of random fluctuations – it’s not a matter of fluctuation; it’s a sustained and well-formed curve. We can argue about dWAR – I agree that noise may sometimes overshadow performance when it comes to yearly fluctuations in dWAR, which is calculated with complex formulas, acknowledged to be imperfect reflections of on-field events – but I don’t see any relation to the straightforward statistical measure here.
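The “78% of a season” and “40% of a season” figures follow from counting league games rather than team games. A small sketch, assuming the 12-team NL of the 1890s and the 132-game schedule mentioned above; since every league game involves two teams, a full season is 12 × 132 / 2 = 792 league games (constant and function names are illustrative):

```python
# Share of a full NL season represented by a block of league games.
# Assumption: 12 teams x 132-game schedule; each league game counts for two
# team-games, so a season has 12 * 132 / 2 = 792 league games.
TEAMS = 12
SCHEDULE = 132
LEAGUE_GAMES = TEAMS * SCHEDULE // 2

def season_share(league_games):
    """Fraction of a full season's league games."""
    return league_games / LEAGUE_GAMES

after_may_1894 = 150 + 157 + 157 + 152  # June through Sept '94
peak_1894 = 157 + 157                   # July + Aug '94

print(LEAGUE_GAMES, after_may_1894, peak_1894)
print(f"{season_share(after_may_1894):.0%} of a season, "
      f"{season_share(peak_1894):.0%} of a season")
```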

On points #2 and #3, there is much we agree on, particularly with regard to the brake that was put on scoring in September ’94 that carried over into the spring of ’95. I think it’s extremely likely that throughout this period, pitchers were learning, effectively, how to adjust to the new pitching distance. If you chart the trend in R/G from 1892 to 1898, and skip 1894, this is what you see:

1892: 10.20

1893: 13.14

1895: 13.16 [12.83 if you eliminate Apr-May]

1896: 12.08

1897: 11.76

1898: 9.92

The overall trend seems clear and logical: the pitching mound change in 1893 caused runs to shoot up, and over time, pitchers adjusted more effectively every year until they had returned to the status quo ante. The September 1893 figure shows that they had made progress during 1893, which continued into the spring of ’94, and once the year-long run inflation of June 1894-May 1895 was over, that progress continued. During the run-inflation period, we see the same trend: after the three-month summer peak, pitchers regained some ground in September and into the following spring, before the R/G rate suddenly dropped back to its late-1893 level in June and remained in that range, gradually falling thereafter.

Within this long term trend line, only the period June-August 1894 stands out as a period when pitchers seem completely to lose control over the hitters. The peak 34% run inflation of those months – 40% of a season – exceeds the season-on-season run inflation that followed the 1893 move of the pitchers’ mound (29%), which was, at least until the 1920 lively ball, the single most dramatic alteration in the game in favor of hitters in its history.
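The season-on-season comparison can be checked from the R/G list above. A short sketch using those figures (1894 deliberately left out, as in the list); the 1892-to-1893 step works out to about +28.8%, the “29%” cited here, and every later step is a decline:

```python
# League R/G by season as listed above (1894 omitted on purpose).
rpg = {1892: 10.20, 1893: 13.14, 1895: 13.16, 1896: 12.08, 1897: 11.76, 1898: 9.92}

def pct_change(old, new):
    """Percent change from old to new."""
    return 100.0 * (new / old - 1.0)

seasons = sorted(rpg)
for prev, cur in zip(seasons, seasons[1:]):
    print(f"{prev} -> {cur}: {pct_change(rpg[prev], rpg[cur]):+.1f}%")
```

Note that the 1893 to 1895 step spans two seasons, since 1894 is skipped.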

Your hypothesis about the sudden rise in hitting / collapse in pitching at the end of May 1894 relies on a scenario of synchronization that I think does not fit the era. There was in those days very little consultation among players during the off-season: players scattered to their homes, took up their winter work, and communicated with other players, if at all, only through the US mail, long-distance phone calling being a luxury item. During the season, players on individual teams communicated daily, but the geographical separation of teams had a much greater impact (aside from Brooklyn and New York, then separate cities, no two teams were close enough for players to visit one another on off days), and conversations crossed team lines much more rarely. There was no national sports press to bring issues to the fore and alert players and managers to the topics others were talking about. Moreover, when you suggest that the pitchers were exerting extraordinary effort in the spring of 1894 to counter the hitting rise of 1893, I think you are overlooking the much more obvious explanation: pitchers had already begun to solve the problem of pitching from 60’6” by late 1893, and the Apr-May ’94 rate simply continued the trend – a trend that suddenly resumed at its former level one year later and continued for four more seasons. The spring data does not indicate exceptional effort; it indicates a normal progression continuing.

The strategy you are using in #2-3 is to imagine a confluence of moderate factors, all consistent with a normal range of fluctuations, that, combined, could account for an exceptional deviation in R/G. However, apart from the substantial odds required to bring all these factors into alignment in synchronization, this strategy ignores the fact that many other factors bearing on the game may have been taking place, some working in the other direction. For example – and here, once again, I’m making this up – is there any reason not to suppose that throughout the entire period 1893-1898, fielders were adapting with increasing skill to the new pressures introduced by the mound reconfiguration and hitting improvement? Even if pitchers, who had been incrementally improving their response to hitters, had suddenly worn out in June 1894, wouldn’t that effect have been buffered by increased improvements in fielding? When imagining a complex configuration of causes that could explain an outcome, it’s important, I think, to bear in mind that this almost always involves a reduction of real life to only those components that work in favor of the explanation.

Well, I’m now just beginning to repeat my points – ok: more truthfully, I’m continuing to repeat them over and over. But I do have one new data point to add that I was not aware of before, and that tempts me to strengthen my “lively ball” hypothesis into an actual claim. In looking for information about the 1894 season, I came across this passage on John Thorn’s blog:

The hitting explosion produced, at its zenith in 1894, a league ERA of 5.32, a team batting average of .349 (for the fourth-place Phillies), and nearly twice as many walks as strikeouts. That such a boost could have been anticipated was demonstrated by a little-known experiment in the Players League of 1890. In its attempt to win fan favor through increased scoring, the rival major league moved its pitching box back 1.5 feet and, with the addition of a new lively ball, produced a batting average twenty points higher than those in the two established major leagues.

This is the first I knew of the fact that any 19th century league had experimented with changes in the ball, designed to increase run production and draw crowds. It establishes a real precedent for the idea I was suggesting only as a thought experiment. We do know that the changes of 1893 were designed to increase scoring and draw crowds, and also that they worked – scoring rose almost 30% and attendance rose 28%. I kind of blew off Mike L on this string when he suggested that the Panic of 1893 might have had some connection to the increase in runs, but now I’m wondering whether, given the Players League precedent, the “magnates” might not in 1894 have thought that they might need to pour a little more gas on the fire to ensure that in tough economic times attendance and their income did not retreat from the levels of the prior season, and, having borrowed the Players League idea of the elongated pitching distance the year before, have done what I imagined above: ordered lively balls to be used during the ’94 season. If John Thorn, the “Official Historian of MLB,” sees some sort of link between the Players League innovations and the ’94 hitting explosion, perhaps there’s something to it.

Well, if I were retired, I could think of worse ways to spend my time than immersing myself in baseball stats and positing ideas about some stories behind them. I can only dream.

Your points are well taken, and I’m not so attached to the ideas I put forward that I’d choose them as my hill to die on, so I’ll just say that I appreciate the thoughtful back-and-forth, and want to put forward a couple of small clarifications. First, I didn’t mean to bring up hitting consecutive baskets or dWAR as anything more than analogous fluctuations that it’d be dangerous to put narratives behind. I don’t think they’re similar stats in any other sense. The increase from May to June looks dramatic on its own, but I figured I might as well make an effort to look into the data a bit myself, so I turned to something I’m a bit more comfortable with, which is standard deviation (I teach intro stats at university so I’m great with the real simple stuff). Taking the mean and standard deviation of the six monthly R/G figures from your original post, here are the standard scores (z-scores) for the months of the season:

April: -1.11

May: -1.27

June: 0.29

July: 0.99

August: 1.04

September: 0.07

There’s obviously a sequential pattern, but I don’t feel like any of these numbers is an outrageous outlier – if you mapped them on a normal distribution, they’d all fall well within the realm of probability. The sudden jump is dramatic, and sustained, but (I don’t think) outrageous. So I definitely think something *could* be going on, just not convinced something *is*. I look at the number of games in a month and don’t see a big enough sample size to determine anything conclusive; you do. We’re just seeing the same phenomenon with slightly different takes, to-may-to to-mah-to. (to-may-to is the correct way to say it FYI)
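For readers who want to try the standard-score approach themselves, here is a minimal Python sketch. The six monthly 1894 figures from the original post are not reproduced in this thread, so the illustration uses the five 1894 periods quoted earlier (12.1, 14.9, 16.2, 16.3, 14.5, with April and May combined); its scores will therefore differ from the six listed above. The `z_scores` helper is a hypothetical name. As a side check, z-scores computed with the sample standard deviation sum to about zero and their squares sum to about n − 1; the six published scores appear consistent with that (their squares sum to roughly 5), though that is an inference, not something stated in the comment.

```python
from statistics import mean, stdev

def z_scores(values):
    """Standard scores using the sample standard deviation (n - 1 denominator)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]

# Illustration only: 1894 R/G by period as quoted earlier in the thread,
# with April and May combined (NOT the six-month series used above).
rg_1894 = {"Apr/May": 12.1, "June": 14.9, "July": 16.2, "Aug": 16.3, "Sept": 14.5}
for label, z in zip(rg_1894, z_scores(list(rg_1894.values()))):
    print(f"{label:8s} {z:+.2f}")

# Consistency check on the six published scores: with a sample SD, the
# scores should sum to ~0 and their squares to ~n - 1 (here, 5).
published = [-1.11, -1.27, 0.29, 0.99, 1.04, 0.07]
print(round(sum(published), 2), round(sum(z * z for z in published), 2))
```

Using `statistics.pstdev` instead would give population z-scores, whose squares sum to n rather than n − 1.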

Second, I meant to say just about the same thing you’re saying – because there was probably less leaguewide communication, things weren’t figured out right away. They just looked like they happened suddenly because of a few small factors that happened at the same time as a normal fluctuation, is my theory. But again, not the most robust theory. Honestly, something to do with a different ball would be a much, much more plausible explanation.

I see Doug’s posted about hitters from the same time. That should be fun to explore too. Thanks again for the discussion!

To show how close we are, bells, I grew up in a home where ‘to-mah-to’ was correct, along with a number of other highfalutin forms meant to signal that we’d left the old country behind. Among them all, ‘to-mah-to’ is the only one that as an adult I consciously abandoned, though it took a lot of practice ignoring the parental corrections echoing in my head, and every time I mention tomatoes I taste a mild spice of irony.

Stats is not my natural game: my gig was teaching kids how to decipher inscriptions, which is less useful here than one might hope. I think it’s time I bought myself a dummies’ book for stats to try to keep up a little (I make ‘no statistician but’ look like a pro). But I enjoyed this discussion nevertheless.

See you next string.

Quick thanks from someone who stayed up far too late watching election returns. The community here is smart, informed, and always civil.

It’s eerily like our political discourse, isn’t it: calmly intelligent, fact based and critically reasoned, and, of course, polite to a fault. It’s nice to think that HHS is just a reflection of America at large.

For those so inclined, a new quiz offering is available here.

They all have 500+ G, 0 GS and ERA+ >= 135, but so do 11 others

Should be 10 others.

How about all of the above AND IP > 1 x G ?
